Generative Subtitles: Speech to Understanding

03 Sep 2024

8 mins read

Last year, I wrote a blog post about video localization. A year is an awfully long time in technology – actually, it’s an awfully long time for anything. In the last 12 months, Cape Verde became the first sub-Saharan African country in 50 years to be declared malaria-free by the WHO. Queen Margrethe of Denmark abdicated the throne, leaving no reigning female sovereigns in the world. Japan became the fifth country to land a spacecraft on the moon. And TikTok became the third most downloaded social media platform in the world.

It seems that no matter what happens, we continue to record videos about it. Statista tells us that media content is expected to generate US$1.6 trillion in revenue in 2024 and will continue growing. Consumers are getting more and more used to receiving information in snackable videos, or having any movie they desire at their fingertips. We also work around the clock by recording meetings (Life Pro Tip: I’ve discovered the amazing timesaving trick of skipping meetings where I don’t have anything to contribute, and then watching the recordings at 1.5x speed later).

At the same time, AI technology is advancing in leaps and bounds. I’m still waiting for it to do my household chores – when will we get a Roomba that can vacuum stairs? – but in the meantime, we can continue to develop new ways to increase productivity, increase quality, and save money.

Human expertise continues to gain value as AI is poised to take over our more menial tasks. But that means video localization continues to be a very expensive, specialized field. Why is that? Well, let me cheat and copy directly from last year’s blog:

- It’s very complex to do. There are specialty applications built to let professionals do this work.

- Subtitles are difficult to translate. There are constraints around the length of the text, the text has to fit to the video, sentences are cut off because of pauses or scene changes… the list goes on. There are good reasons why this is typically handled by professionals.

- Automatic translation doesn’t cope well with segment fragments, but subtitles have to match what you see on screen in a video.

- Quality Assurance is time-consuming. You need to view, listen, and read again and again… and again… through the whole video in all languages.

- A millisecond counts. Viewers will notice if the subtitle is offset from the actual speech.
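To make those constraints a little more concrete, here is a minimal Python sketch of the kind of check a subtitle QA pass might run on each cue. Everything in it is an assumption for illustration: the `Cue` class, the `check_cue` function, and the limits (42 characters per line, 2 lines, 17 characters per second) are not taken from Trados. Real projects follow whatever style guide the client specifies.

```python
from dataclasses import dataclass


@dataclass
class Cue:
    """One subtitle cue: start/end times in milliseconds plus its text."""
    start_ms: int
    end_ms: int
    text: str


def check_cue(cue, max_chars_per_line=42, max_lines=2, max_chars_per_second=17.0):
    """Return a list of human-readable problems found in a single cue.

    The default limits are illustrative assumptions, not official values.
    """
    problems = []
    lines = cue.text.split("\n")

    # Too many lines cover too much of the picture.
    if len(lines) > max_lines:
        problems.append(f"too many lines: {len(lines)}")

    # Long lines may not fit on screen.
    for line in lines:
        if len(line) > max_chars_per_line:
            problems.append(f"line too long: {len(line)} chars")

    # Reading speed: characters shown per second of display time.
    duration_s = (cue.end_ms - cue.start_ms) / 1000
    if duration_s <= 0:
        problems.append("non-positive duration")
    else:
        cps = len(cue.text.replace("\n", "")) / duration_s
        if cps > max_chars_per_second:
            problems.append(f"reading speed too high: {cps:.1f} cps")

    return problems
```

A translated cue that is longer than its source can fail a check like this even when the source passed, which is one reason subtitle translation is harder than translating running text.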

When the next season of Squid Game comes out, I want those subtitles to be as close to perfect as possible – please, Netflix, make sure professional linguists are involved! But for that webinar I missed, I just need to understand the content, without any heart-stopping Red Light / Green Light scenes. Unfortunately, with the high cost of video localization, those webinars aren’t run with big budgets, and so they typically aren’t subtitled or localized… until now!

Introducing the new Trados Generative Subtitles capability

With Generative Subtitles, you can quickly create a cloud translation project for your video (using the customer portal or a built-in connector) and then watch in awe as the subtitles are automatically generated, respecting industry best practices. Add a custom prompt to ensure your name is spelled correctly when that emcee with the sexy accent introduces you in the video.

Fantastic, right? There’s half the problem solved. But localization is definitely a challenge too. Or, I should say, localization WAS a challenge too!

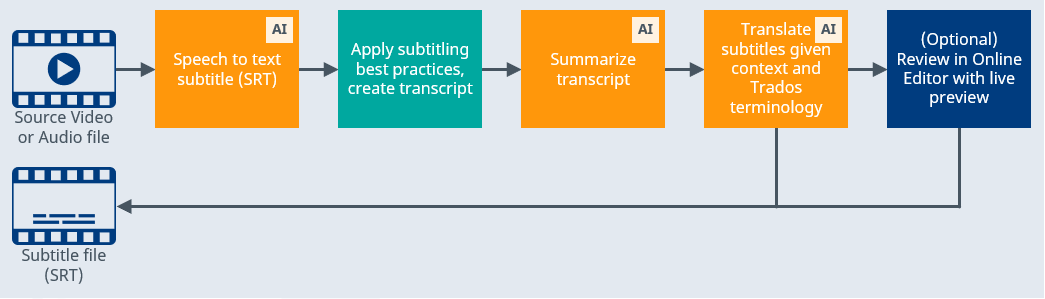

What if instead of translating segment snippets, we now take that subtitle file, and use an LLM to translate it? And send that LLM a summary of your video so it has context to work with? And even send it your terminology at the same time?
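For the curious, the idea can be sketched in a few lines of Python. This is only an illustration of combining the whole subtitle file, a summary, and terminology into one request; the actual prompts and models behind Generative Subtitles are not described here, and the function name `build_translation_prompt`, its parameters, and the prompt wording are all hypothetical. The sketch stops at building the prompt and does not call any LLM.

```python
def build_translation_prompt(srt_text, summary, glossary, target_language):
    """Assemble one prompt carrying the full subtitle file plus its context.

    Hypothetical helper for illustration only; not the product's real prompt.
    """
    # Render the terminology as simple "source => target" lines.
    term_lines = "\n".join(f"- {src} => {tgt}" for src, tgt in glossary.items())

    return (
        f"Translate the subtitle file below into {target_language}.\n"
        "Keep every cue number and timestamp unchanged; translate only the text.\n\n"
        f"Video summary (for context):\n{summary}\n\n"
        f"Required terminology:\n{term_lines}\n\n"
        f"Subtitle file:\n{srt_text}"
    )


# Example input: a two-cue subtitle file where one sentence is split by a pause.
example_srt = (
    "1\n00:00:01,000 --> 00:00:03,000\nWelcome to our webinar on\n\n"
    "2\n00:00:03,200 --> 00:00:05,000\ntranslation memory.\n"
)
prompt = build_translation_prompt(
    example_srt,
    summary="An introductory webinar about translation technology.",
    glossary={"translation memory": "mémoire de traduction"},
    target_language="French",
)
```

Because both cues travel in the same request, the model sees the complete sentence rather than two disconnected fragments, which is the point of translating the file instead of segment snippets.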

You guessed it – you get quality localization of those subtitles! See how it works:

Wait. That last step, ‘review in online editor with live preview’… you noticed it, didn’t you? Very clever. Well, often, for those webinars, training videos, and more, fully automated is good enough. But my boss was mumbling when he recorded his instructions, and I need to make him look good. So let’s see what that looks like.

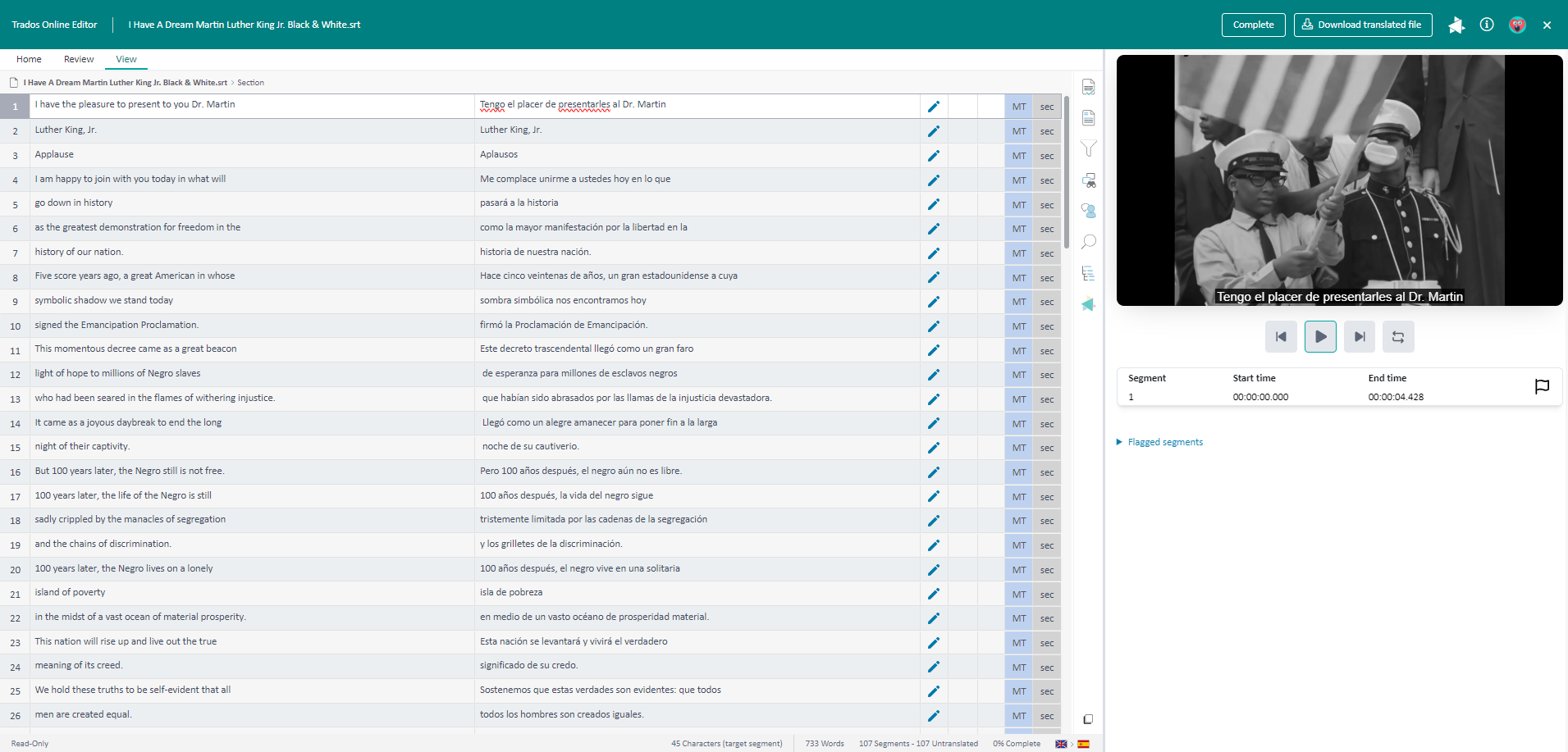

Screenshot of in-context preview in action in the online editor

Watch the magic as the Online Editor text scrolls through while the video plays, with sound. See your subtitles change in real time as you edit that text. Flag segments so you can come back later and ask your boss, what on earth were you thinking? Or – if you’re reading this Matt (my boss) – I meant, so you can come back later and congratulate your boss on his deep insights.

I know by now you’re wondering: this sounds too good to be true! Am I dreaming?

If you’re hearing this in my voice and seeing subtitles popping up under my head, the answer is... probably YES! But if not, let’s talk about all the usual stuff.

- Security and privacy? Check! The LLMs used in this fabulous solution are hosted within the RWS Language Cloud estate. Your data never becomes public.

- Workflow automation? Check! This uses your standard Trados Cloud environment with project templates, workflow templates, task assignments, etc. Drop in those videos and go have a coffee.

- Reporting? Check! These are projects like any other. We’ll continue to add new data for tracking purposes, but rest assured that you’ll be able to show off your skyrocketing productivity.

This is amazing! By now you’re probably asking, dear Trados, what can’t you do?

Well, Deadpool & Wolverine won’t be subtitled using Trados alone. Our friends at Marvel should likely be using a dedicated solution alongside it, such as CaptionHub from one of our partners, that allows them to change timestamps, move subtitles around the screen, and translate Deadpool’s colorful language more colloquially.

Want to see the results? Our Elevate videos were subtitled and localized using this solution.

Want to see this in practice? Reach out to your account manager to schedule a demo… and be sure to bring popcorn, since multimedia will become your new favorite file type.